Hi, my name is Mox!

This story begins in 2013, in a preschool in Boston, where I hide, with laptop, headphones, and microphone, in a little kitchenette. Ethernet cables trail across the hall to the classroom, where 17 children eagerly await their turn to talk to a small fluffy robot.

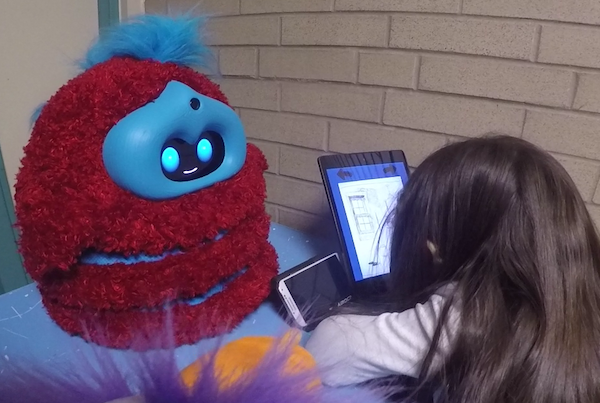

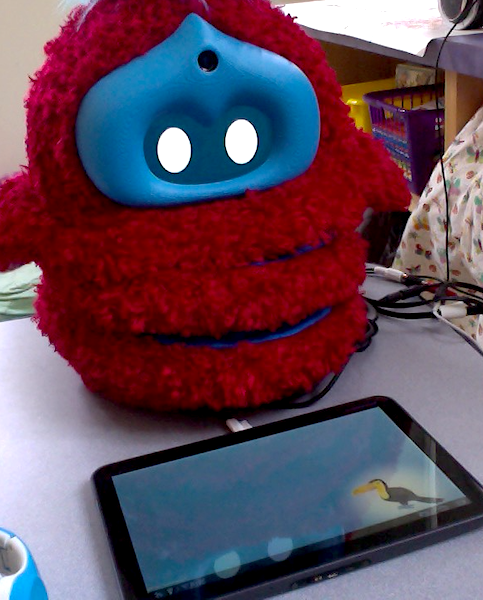

DragonBot is a squash-and-stretch robot designed for playing with young children.

"Hi, my name is Mox! I'm very happy to meet you."

The pitch of my voice is shifted up and sent over the somewhat laggy network. My words, played by the speakers of Mox the robot and picked up by its microphone, echo back with a two-second delay into my headphones. It's tricky to speak at the right pace, ignoring my own voice bouncing back, but I get into the swing of it pretty quickly.

We're running show-and-tell at the preschool on this day. It's one of our pilot tests before we embark on an upcoming experimental study. The children take turns telling the robot about their favorite animals. The robot (with my voice) replies with an interesting fact about each animal: "Did you know that capybaras are the largest rodents on the planet?" (Yes, one five-year-old's favorite animal is a capybara.) Later, we share how the robot is made and talk about motors, batteries, and 3D printers. We show them the teleoperation interface for remote-controlling the robot. All the kids try their hand at triggering the robot's facial expressions.

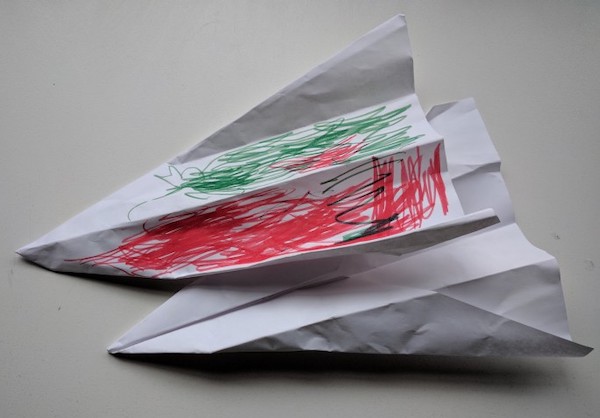

Then one kid asks if he can teach the robot how to make a paper airplane.

Two paper airplanes that a child gave to DragonBot.

We'd just told them all how the robot was controlled by a human. I ask: Does he want to teach me how to make a paper airplane?

No, the robot, he says.

Somehow, there was a disconnect between what he had just learned about the robot and the robot's human operator, and the character that he perceived the robot to be.

Relationships with robots?

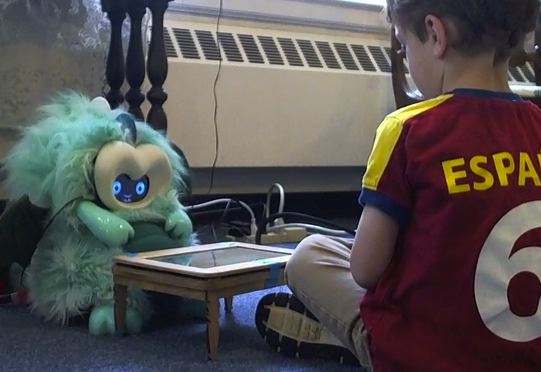

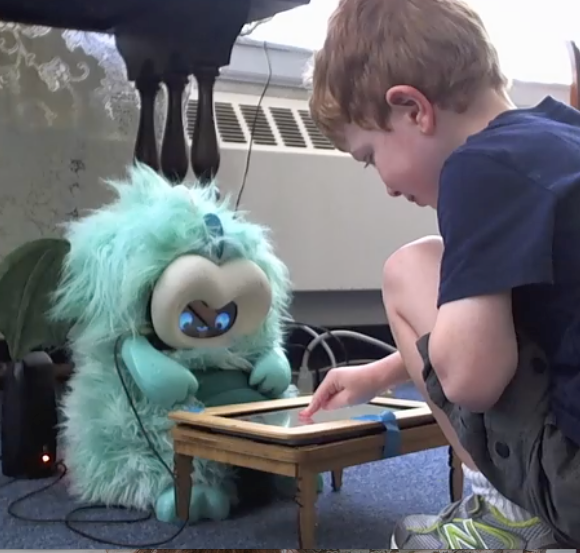

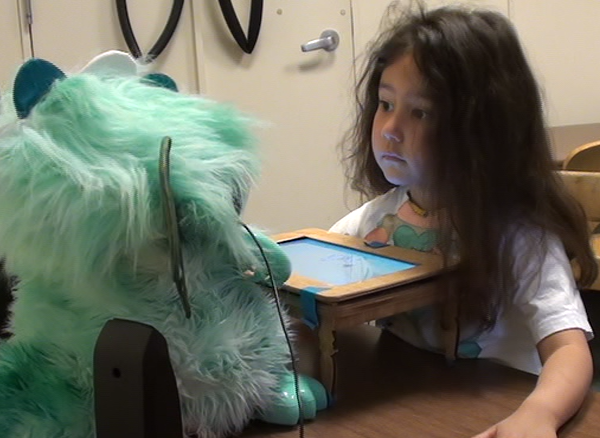

A child touches Tega's face while playing a language learning game.

In the years since that playtest, I've watched several hundred children interact with both teleoperated and autonomous robots. The children talk with the robots. They laugh. They give hugs, drawings, and paper airplanes. One child even invited the robot to his preschool's end-of-year picnic.

Mostly, though, I've seen kids treat the robots as social beings. But not quite like how they treat people. And not quite like how they treat pets, plants, or computers.

These interactions were clues: There's something interesting going on here. Children ascribed physical attributes to robots—they can move, they can see, they can feel tickles—but also mental attributes: thinking, feeling sad, wanting companionship. A robot could break, yes, and it is made by a person, yes, but it can be interested in things. It can like stories; it can be nice. Maybe, as one child suggested, if it were sad, it would feel better if we gave it ice cream.

A child listens to DragonBot tell a story during one of our research studies.

Although our research robots aren't commercially available, investigating how children understand robots isn't merely an academic exercise. Many smart technologies are joining us in our homes: Roomba, Jibo, Alexa, Google Home, Kuri, Zenbo...the list goes on. Robots and AI are here, in our everyday lives.

We ought to ask ourselves, what kinds of relationships do we want to have with them? Because, as we saw with the children in our studies, we will form relationships with them.

We see agency everywhere

One reason we can't help forming relationships with robots is that humans have evolved to see agency and intention everywhere. If an object moves independently in an apparently goal-directed way, we interpret that as agency; that is, we see the object as an agent. Even in something as simple as a couple of animated triangles moving around on a screen, we look for, and project, agency and intentionality.

If you think about the theory of evolution, this makes sense. Is the movement I spotted out of the corner of my eye just a couple leaves dancing in the breeze, or is it a tiger? My survival relies on thinking it's a tiger.

But relationships aren't built on merely recognizing other agents; relationships are social constructs. And, humans are uniquely—unequivocally—social creatures. Social is the warp and weft of our lives. Everything is about our interactions with others: people, pets, characters in our favorite shows or books, even our plants or our cars. We need companionship and community to thrive. We pay close attention to social cues—like eye gaze, emotions, politeness—whether these cues come from a person...or from a machine.

Researchers have spent the past 25 years showing that humans respond to computers and machines as if those objects were people. There's even a classic book, published by Byron Reeves and Clifford Nass in 1996, titled The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places. Among their findings: people assign personalities to digital devices, and people are polite to computers (for example, they evaluate a computer more positively when they have to tell it to its face). Merely telling people a computer is on their team leads them to rate it as more cooperative and friendly.

Research since that book has shown again and again that these findings still hold: Humans treat machines as social beings. And this brings us back to my work now.

Designing social robots to help kids

I'm a PhD student in the Personal Robots Group. We work in the field of human-robot interaction (HRI). HRI studies questions such as: How do people think about and react to robots? How can we make robots that will help people in different areas of their lives, like manufacturing, healthcare, or education? How do we build autonomous robots, including algorithms for perception, social interaction, and learning? At the broadest scale, HRI encompasses anything where humans and robots come into contact and do things with, or near, each other.

Look, we match!

As you might guess based on the anecdotes I've shared in this post, the piece of HRI I'm working on is robots for kids.

There are numerous projects in our group right now focusing on different aspects of this: robots that help kids in hospitals, robots that help kids learn programming, robots that promote curiosity and a growth mindset, robots that help kids learn language skills.

In my research, I've been asking questions like: Can we build social robots that support children's early language and literacy learning? What design features of the robots affect children's learning—like the expressivity of the robot's voice, the robot's social contingency, or whether it provides personalized feedback? How, and what, do children think about these robots?

Will robots replace teachers?

When I tell people about the Media Lab's work with robots for children's education, a common question is: "Are you trying to replace teachers?"

To allay concerns: No, we aren't.

(There are also some parents who say that's nice, but can you build us some robot babysitters, soon, pretty please?)

We're not trying to replace teachers for two reasons:

- We don't want to.

- Even if we wanted to, we couldn't.

Teachers, tutors, parents, and other caregivers are irreplaceable. Despite all the research that seems to point to the conclusion "robots can be like people", there are also studies showing that children learn more from human tutors than from robot tutors. Robots don't have all the capabilities that people do for adapting to a particular child's needs. They have limited sensing and perception, especially when it comes to recognizing children's speech. They can't understand natural language (and we're not much closer to solving the underlying symbol grounding problem). So, for now, as much as science fiction would have us believe otherwise (e.g., androids, cylons, terminators, and so on), robots are not human.

Even if we eventually get to the point where robots do have all the necessary human-like capabilities to be like human teachers and tutors—and we don't know how far in the future that would be or if it's even possible—humans are still the ones building the robots. We get to decide what we build. In our lab, we want to build robots that help humans and support human flourishing. That said, saying that we want to build helpful robots only goes so far. There's still more work to ensure that all the technology we build is beneficial, and not harmful, for humans. More on that later in this post.

A mother reads a digital storybook with her child.

The role we foresee for robots and similar technologies is complementary: they are a new tool for education. Like affective pedagogical agents and intelligent tutoring systems, they can provide new activities and new ways of reaching kids. The teachers we've talked to in our research are excited about the prospects. They've suggested that the robot could provide personalized content, or connect learning in school to learning at home. We think robots could supplement what caregivers already do, support them in their efforts, and scaffold or model beneficial behaviors that caregivers may not know to use, or may not be able to use.

For example, one beneficial behavior during book reading is asking dialogic questions—that is, questions that prompt the child to think about the story, predict what might happen next, and engage more deeply with the material. Past work from our group has shown that when you add a virtual character to a digital storybook who models this dialogic questioning, it can help parents learn what kinds of questions they can ask, and remember to ask them more often.

In another Media Lab project, Natalie Freed—an alum of our group—made a simple vocabulary-learning game with a robot that children and their parents played together. The robot's presence encouraged communication and discussion. Parents guided and reinforced children's behavior in a way that aligned with the language learning goals of the game. Technology can facilitate child-caregiver interactions.

In summary, in the Personal Robots Group, we want our robots to augment existing relationships between children and their families, friends, and caregivers. Robots aren't human, and they won't replace people. But they will be robots.

Robots are friends—sort of?

In our research, we hear a lot of children's stories. Some are fictional: tales of penguins and snowmen, superheroes and villains, animals playing hide-and-seek and friends playing ball. Some are real: robots who look like rock stars, who ask questions and can listen, who might want ice cream when they're sad.

Such stories can tell you a lot about how children think. And we've found that not only will children learn new words and tell stories with robots, they also think of the robots as active social partners.

In one study, preschool children talked about their favorite animals with two DragonBots, Green and Yellow. One robot was contingently responsive: it nodded and smiled at all the right times. The other was just as expressive, but not contingent—you might be talking, and it might be looking behind you, or it might interrupt you to say "mmhm!", rather than waiting until a pause in your speech.

Two DragonBots, ready to play!

Children were especially attentive to the more contingent robot, spending more time looking at it. We also asked children a couple questions to test whether they thought the robots were equally reliable informants. We showed children a new animal and asked them, "Which robot do you want to ask about this animal's name?" Children chose one of the robots.

But then each robot provided a different name! So we asked: "Which robot do you believe?" Regardless of which robot they had initially chosen (though most chose the contingent robot), almost all the children believed the contingent robot.

This targeted information seeking is consistent with previous psychology and education research showing that children are selective in choosing whom to question or endorse. They use their interlocutor's nonverbal social cues to decide how reliable that person is, or how reliable that robot is.

Then we performed a couple other studies to learn about children's word learning with robots. We found that here, too, children paid attention to the robot's social cues. As in their interactions with people, children followed the robot's gaze and watched the robot's body orientation to figure out which objects the robot was naming.

We looked at longer interactions. Instead of playing with the robot once, children got to play seven or eight times. For two months, we observed children take turns telling stories with a robot. Did they learn? Did they stay engaged, or get bored? The results were promising: The children liked telling their own stories to the robot. They copied parts of the robot's stories—borrowing characters, settings, and even some of the new vocabulary words that the robot had introduced.

We looked at personalization. If you have technology, after all, one of the benefits is that you can customize it for individuals. If the robot "leveled" its stories to match the child's current language abilities, would that lead to more learning? If the robot personalized the kinds of motivational strategies it used, would that increase learning or engagement?

A girl looks up at DragonBot during a storytelling game.

Again and again, the results pointed to one thing: Children responded to these robots as social beings. Robots that acted more human-like (being more expressive, being responsive, personalizing content and responses) led to more engagement and learning by the children. Even the expressiveness of the robot's voice mattered. When we compared a robot that had a really expressive voice to one that had a flat, boring voice (like a classic text-to-speech computer voice), we saw that with the expressive robot, children were more engaged, remembered the story more accurately, and used the key vocabulary words more often.

All these results make sense: There's a lot of research showing that these kinds of "high immediacy" behaviors are beneficial for building relationships, teaching, and communicating.

Beyond learning, we also looked at how children thought and felt about the robot.

We looked at how the robot was introduced to children: If you tell them it's a machine, rather than introducing it as a friend, do children treat the robot differently? We didn't see many differences. In general, children reacted in the moment to the social robot in front of them. You could say "it's just a robot, Frank," but like the little boy I mentioned earlier who wanted to teach the robot how to make a paper airplane, they didn't really get the distinction.

Or maybe they got it just fine, but to them, what it means to be a robot is different from what we adults think it means to be a robot.

Across all the studies, children claimed the robot was a friend. They knew it couldn't grow or eat like a person, but, as I noted earlier, they happily ascribed to it thinking, seeing, feeling tickles, and being happy or sad. They shared stories and personal information. They taught each other skills. Sure, the kids knew that a person had made the robot, and maybe it could break, but the robot was a nice, helpful character that was sort of like a person and sort of like a computer, but not really either.

And there was that one child who invited the robot to a picnic.

For children, the ontologies we adults know, the categories we see as clear-cut, are still being learned. Is something real, or is it pretending? Is something a machine, or a person? Maybe it doesn't matter. To a child, someone can be imaginary and still be a friend. A robot can be in-between other things. It can be not quite a machine, not quite a pet, not quite a friend, but a little of each.

But human-robot relationships aren't authentic!

One concern some people have when talking about relationships with social robots is that the robots are pretending to be a kind of entity that they are not, namely, an entity that can reciprocally engage in emotional experiences with us. That is, they're inauthentic: they provoke undeserved and unreciprocated emotional attachment, trust, caring, and empathy.

But why must reciprocality be a requirement for a significant, authentic relationship?

People already attach deeply to a lot of non-human things. People already have significant emotional and social relationships that are non-reciprocal: pets, cars, stuffed animals, favorite toys, security blankets, and pacifiers. Fictional characters in books, movies, and TV shows. Chatbots and virtual therapists, smart home devices, and virtual assistants.

A child may love their dog, and you may clearly see that the dog "loves" the child back, but not in a human-like way. We aren't afraid that the dog will replace the child's human relationships. We acknowledge that our relationships with our pets, our friends, our parents, our siblings, our cars, and our favorite fictional characters are all different, and all real. Yet the default assumption is generally that robots will replace human relationships.

If done right (more on that in a moment), human-robot relationships could just be one more different kind of relationship.

So we can make relational robots? Should we?

When we talk about how we can make robots that have relationships with kids, we also have to ask one big lurking question:

Should we?

Social robots have a lot of potential benefits. Robots can help kids learn; they can be used in therapy, education, and healthcare. How do we make sure we do it "right"? What guiding principles should we follow?

How do we build robots to help kids in a way that's not creepy and doesn't teach kids bad behaviors?

I think caring about building robots "right" is a good first step, because not everybody cares, and because it's up to us. We humans build robots. If we want them not to be creepy, we have to design and build them that way. If we want socially assistive robots instead of robot overlords, well, that's on us.

Tega says, 'What do you want to do tonight, DragonBot?' DragonBot responds, 'The same thing we do every night, Tega! Try to take over the world!'

Fortunately, there's growing international interest across many disciplines in studying the ethics of placing robots in people's lives. For example, the Foundation for Responsible Robotics is thinking about future policy around robot design and development. The IEEE Standards Association has an initiative on ethical considerations for autonomous systems. The Open Roboethics initiative polls relevant stakeholders (like you and me) about important ethical questions to find out what people who aren't necessarily "experts" think: Should robots make life or death decisions? Would you trust a robot to take care of your grandma? There is an increasing number of workshops on robot policy and ethics at major robotics conferences, and I've attended some myself. There's a whole conference on law and robots.

The fact that there's multidisciplinary interest is crucial. Not only do we have to care about building robots responsibly, but we also have to involve a lot of different people in making it happen. We have to work with people from related industries who face the same kinds of ethical dilemmas, because robots aren't the only technology that could go awry.

We also have to involve all the relevant stakeholders—a lot more people than just the academics, designers, and engineers who build the robots. We have to work with parents and children. We have to work with clinicians, therapists, teachers. It may sound straightforward, but it can go a long way toward making sure the robots help and support the people they're supposed to help and support.

We have to learn from the mistakes made by other industries. This is a hard one, but there's certainly a lot to learn from. When we ask if robots will be socially manipulative, we can see how advertising and marketing have handled manipulation, and how we can avoid some of the problematic issues. We can study other persuasive technologies and addictive games. We can learn about creating positive behavior change instead. Maybe, as was suggested at one robot ethics workshop, we could create "warning labels" similar to nutrition labels or movie ratings, which explain the risks of interacting with particular technologies, what the technology is capable of, or even recommended "dosage", as a way of raising awareness of possible addictive or negative consequences.

For managing privacy, safety, and security, we can see what other surveillance technologies and internet of things devices have done wrong, such as not encrypting network traffic and failing to inform users of data breaches in a timely manner. Manufacturing already has standards for "safety by design", so could we create similar standards for "security by design"? We may need new regulations regarding what data can be collected: for example, requiring warrants to access any data from inside homes, or HIPAA-like protections for personal data. We may need roboticists to adopt an ethical code similar to the codes professionals in other fields follow, but one that emphasizes privacy, intellectual property, and transparency.

There are a lot of open questions. If you came into this discussion with concerns about the future of social robots, I hope I've managed to address them. But I'll be the first to tell you that our work is not even close to being done. There are many other challenges we still need to tackle, and opening up this conversation is an important first step. Making future technologies and robot companions beneficial for humans, rather than harmful, is going to take effort.

It's a work in progress.

Keep learning, think carefully, dream big

We're not done learning about robot ethics, designing positive technologies, or children's relationships with robots. In my dissertation work, I ask questions about how children think about robots, how they relate to them through time, and how their relationships are different from relationships with other people and things. Who knows: we may yet find that children do, in fact, realize that robots are "just pretending" (for now, anyway), but that kids are perfectly happy to suspend disbelief while they play with those robots.

As more and more robots and smart devices enter our lives, our attitudes toward them may change. Maybe the next generation of kids, growing up with different technology, and different relationships with technology, will think this whole discussion is silly because of course robots take whatever role they take and do whatever it is they do. Maybe by the time they grow up, we'll have appropriate regulations, ethical codes, and industry standards, too.

And maybe—through my work, and through opening up conversations about these issues—our future robot companions will make paper airplanes with us, attend our picnics, and bring us ice cream when we're sad.

Miso the robot looks at a bowl of ice cream.

If you'd like to learn more about the topics in this post, I've compiled a list of relevant research and helpful links!

—

This article originally appeared on the MIT Media Lab website, June 2017

Acknowledgments:

The research I talk about in this post involved collaborations with, and help from, many people: Cynthia Breazeal, Polly Guggenheim, Sooyeon Jeong, Paul Harris, David DeSteno, Rosalind Picard, Edith Ackermann, Leah Dickens, Hae Won Park, Meng Xi, Goren Gordon, Michal Gordon, Samuel Ronfard, Jin Joo Lee, Nick de Palma, Siggi Aðalgeirsson, Samuel Spaulding, Luke Plummer, Kris dos Santos, Rebecca Kleinberger, Ehsan Hoque, Palash Nandy, David Nuñez, Natalie Freed, Adam Setapen, Marayna Martinez, Maryam Archie, Madhurima Das, Mirko Gelsomini, Randi Williams, Huili Chen, Pedro Reynolds-Cuéllar, Ishaan Grover, Nikhita Singh, Aradhana Adhikari, Stacy Ho, Lila Jansen, Eileen Rivera, Michal Shlapentokh-Rothman, Ryoya Ogishima.

This research was supported by an MIT Media Lab Learning Innovation Fellowship and by the National Science Foundation. Any opinions, findings and conclusions, or recommendations expressed in this paper are those of the authors and do not represent the views of the NSF.